The BP Texas City Oil Refinery disaster in 2005 shocked the world. With many safeguards in place and lost-time injury rates so low, how could so many people be killed in a wholly preventable disaster?

Transcript available

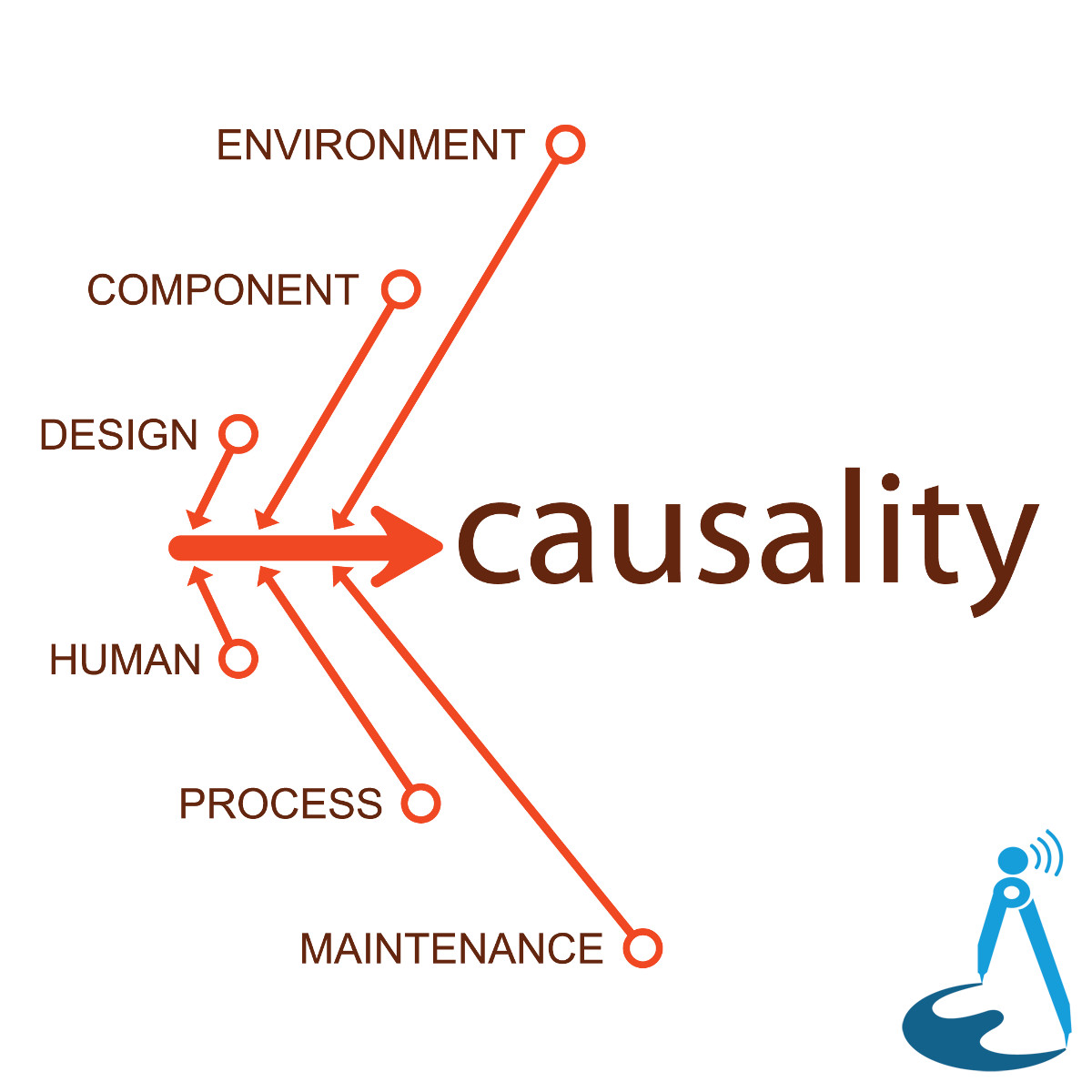

Chain of events, cause and effect. We analyze what went right and what went wrong as we discover that many outcomes can be predicted, planned for, and even prevented. I'm Jon Chidgey and this is Causality. Causality is part of the Engineered Network. To support our shows, including this one, head over to our Patreon page, and for other great shows visit https://engineered.network today.
For the first episode of Causality, I wanted to talk about a disaster that has greatly affected me and my professional career. Not because I've been working in the oil and gas industry for the last three years, but because it's had a profound impact on the way I see safety, safety engineering, and user interface design, particularly in SCADA systems, because those played a part in this disaster. So today we're going to talk about the 2005 disaster at BP's Texas City refinery.
At 1:20pm on Wednesday, March 23, 2005, there was a massive explosion in one of the ISOM units at BP's refinery in Texas City, Texas, just outside of Galveston. 15 people were killed. 180 were injured, most of them seriously, requiring lengthy hospitalization. The blast shattered windows as far as three quarters of a mile, about 1.2 kilometers, from the site. 43,000 residents were forced to stay indoors until the fire was brought under control. The investigation lasted two years, and at that point it was the largest investigation in the entire history of the US Chemical Safety Board.
The refinery itself was very, very large. It employed approximately 1,800 staff and refined about 470,000 barrels of oil a day, making it the largest BP refinery in North America at the time. The incident occurred in something called the ISOM unit. ISOM isn't actually an acronym, it's an abbreviation.
It's an abbreviation of Isomerisation, a process that rearranges a hydrocarbon from one isomer into another, or separates the isomers out. This is all to do with octane ratings and octane boosting for unleaded gasoline, or petrol. Hydrocarbons start with methane, CH4: a single carbon atom with four hydrogen atoms surrounding it. As we add carbon atoms we get longer and longer chains: ethane with two carbon atoms, then propane, butane, pentane, hexane, heptane, octane, nonane and so on.
The specific part of the ISOM unit where the incident occurred was referred to as the Raffinate splitter tower. Raffinate is called "Raff" for short, and it's essentially an unseparated or partly separated stream from the refining process. The idea is you have a tall tower, in this particular case about 170 feet, or 52 meters, high, and the majority of that height is supposed to be empty. You put a small amount of liquid in the bottom, about six feet, or two meters, of it. As you heat it up, the lighter, more volatile hydrocarbons boil off as vapor and rise to the top of the tower, which is part of the reason the tower is so tall: they need that additional space. A 170 foot tower with only six feet of liquid in the bottom seems a bit odd, but that's the way that it works.
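For reference, the straight-chain alkanes in that list follow the general formula CnH(2n+2), so each extra carbon atom brings two more hydrogens. A minimal Python sketch that generates the series from methane to nonane; the list and function names are just for illustration:

```python
# Straight-chain alkanes follow the general formula CnH(2n+2).
ALKANE_NAMES = [
    "methane", "ethane", "propane", "butane", "pentane",
    "hexane", "heptane", "octane", "nonane",
]

def alkane_formula(carbons: int) -> str:
    """Molecular formula of the straight-chain alkane with the given number of carbons."""
    if carbons < 1:
        raise ValueError("an alkane needs at least one carbon atom")
    carbon_part = "C" if carbons == 1 else f"C{carbons}"
    return f"{carbon_part}H{2 * carbons + 2}"

# Print each name alongside its formula, starting from methane (one carbon).
for n, name in enumerate(ALKANE_NAMES, start=1):
    print(f"{name:<8} {alkane_formula(n)}")
```

Isomerisation doesn't change these formulas; it rearranges the same atoms into a branched shape. Isooctane (2,2,4-trimethylpentane), the 100 reference point on the octane scale, is a branched C8H18.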
Once the vapors have accumulated at the top, the lighter components, the pentane, hexane, whatever you're separating, are sent to the light Raffinate storage tank, and the heavier components to the heavy Raffinate storage tank. The overall volume of the tower is huge: about 3,700 barrels. The investigation identified organisational and safety deficiencies at all levels of the organisation at that point. But I'm getting ahead of myself, so where do we begin?
There was an 11-hour period leading up to the explosion. Several units, including the ISOM unit, were shut down for maintenance works, and over a thousand subcontractors were on site during those works. BP had placed 10 portable offices near the Ultracracker unit, and several others near other process units, for convenience during the works. If you're not familiar with these portable offices, they're typically a wooden frame with light sheet metal on the outside, some insulating paneling on the inside, and generally an air conditioning unit to keep them cool in summer. They arrive in pieces and are assembled on site; sometimes you'll see them going down the street in pieces on the back of a truck. They're very popular because although you have permanent facilities on site, during construction you need more space: more documentation, more people coming and going for meetings and so on. They're basically a great, economical way of housing all of the maintenance and construction staff during the works, and they're very common. In Australia some people refer to them as "Dongas".
These particular buildings were placed where they were because they were physically close to the works being undertaken, so that people could walk a few hundred feet from where they were working back to the site office to look at drawings or have a meeting, and then go back out to the site again. From a convenience point of view that sounded great. Unfortunately, they were only about 120 feet, or 37 meters, from the base of the blowdown drum, which would later become a problem.
The odd thing is that if there's an explosion you're actually safer out in the open than inside a building. Rather like a cyclone, hurricane, typhoon or tornado, it's not so much the explosion but the flying debris that will end up killing you. That's notwithstanding the blast pressure, of course: the pressure differential can rupture internal organs if you're close enough to the explosion, but that happens whether you're inside or outside a building. And by building I obviously don't mean a concrete bunker. If you're inside a concrete bunker next to an explosion you're probably going to fare okay, but most of these buildings aren't bomb shelters.
The guidelines at the time of the incident weren't strict enough to disallow temporary structures there, though they were amended after this incident. There was actually a management of change review when the buildings were placed next to the ISOM unit, but several action items from that review were never followed up, and a full risk assessment of a potential explosion was never completed.
BP also had its own procedures covering what we'd call SIMOPs, Simultaneous Operations, these days, including an evacuation of those buildings and the surrounding areas during startup, and they weren't followed. The resulting explosion damaged trailers as far as 479 feet, or about 146 meters, away, and people in those trailers were still injured. The closest office was a double-wide, wooden-frame trailer with 11 offices in it, used primarily for meetings. When the ISOM unit was ready for recommissioning, no notice was given to the users of those buildings, or to anyone else in the area, that it was about to start up. The maintenance was complete, and it was time to turn the unit back on and, quite literally, fire it up.
At 2:15am, the overnight operators began filling the splitter tower with Raffinate. A level transmitter mounted at the bottom of the tower measured that 6 foot operational level I mentioned earlier. The tower had a maximum liquid operating level of about 9 feet, just under 3 meters, measured from the bottom of the tower, and the normal operating level, as I said, was about 6 feet, or 2 meters. During startup, though, the level could fluctuate, and the operators at the time believed that a low level in the tower could damage the furnace or its burners. The liquid is heated in an external furnace and then pumped back into the base of the tower and circulated through, and once it's in the tower the vapors are extracted off the top. The concern was the height of the furnace circuit relative to the bottom of the tower.
So if the level in the bottom of the tower got too low, there was a concern that the level in the furnace circuit would also get too low and damage the furnace. What's not clear in the aftermath is whether that was actually true, and either way it was never thoroughly investigated whether it was a real problem or just a perceived one.
You know the story of the monkeys, the banana and the fire hose. You put three monkeys in a cage with a banana, and whenever one of them goes for the banana, you spray all of them with the fire hose, over and over again. After a while they learn: if we go for the banana, we get sprayed. Then you bring a fourth monkey into the cage, and the other three will stop it from going for the banana, even though it has never been sprayed. It's a thought experiment about institutional learning, and no monkeys were harmed in the making of that analogy.
So rather than investigate, they simply deviated from the documented procedure. The procedure said not to fill the tower beyond the normal operational level of 6 feet; instead they went to 9 feet, because that's the way they had learned to do it, out of concern that a lower level might damage the burners.
At 3:09am, the audible high level alarm went off at the 8 foot mark. That alarm is driven by the level transmitter mounted on the side of the tower, which measures level as an analog value: 0% up to 100%, probably at 9 feet. For redundancy, there was also a secondary, fixed-position high level alarm, essentially a level switch, set just above the analog high level setpoint at the 8 foot mark. That high level switch never went off.
After the incident, investigators found that the level switch had been reported as faulty as early as two years prior. Worse, each time maintenance came around, they identified it as faulty and then closed the work order without ever repairing it. There's no evidence as to why.
By 3:30am the level indicator was showing a full nine feet of liquid, and shortly after noticing this the operators stopped filling the tower. The Chemical Safety Board found that the level had actually reached as high as 13 feet, or about four meters, four feet above the maximum. The operators weren't aware of this because the level transmitter could only report up to nine feet. Beyond that there was no sensing, and the high level switch wasn't working, so they had no way of knowing whether it was 9.1 feet or 900 feet.
You may ask yourself why on earth you would range a level transmitter over such a small fraction of the overall height of the tower. Normally you do that to get better resolution. Let's say I'm turning the reading into a 16-bit integer, so I have 0 to 32,767 discrete points to indicate my range. Convert that into a percentage and you might show one decimal point, maybe two tops, and sometimes you want as much resolution as possible. If you scaled the same range over the entire 170 feet, the resolution would be significantly less. However, at least you would know exactly how much liquid was in the tower. Clearly, when they did the design, they didn't think that was a problem.
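To put numbers on that resolution trade-off, here's a minimal sketch assuming, as in the example above, that the level is stored as a signed 16-bit integer with 0 to 32,767 counts. The constant and function names are hypothetical, and a real analog input card may use a different count range:

```python
# Hypothetical: the level signal is stored as a signed 16-bit integer, 0..32,767 counts.
FULL_SCALE_COUNTS = 32_767
INCHES_PER_FOOT = 12

def inches_per_count(span_ft: float, counts: int = FULL_SCALE_COUNTS) -> float:
    """Change in level, in inches, represented by a single count over the given span."""
    return span_ft * INCHES_PER_FOOT / counts

narrow = inches_per_count(9)    # ranged over the bottom 9 feet only
full = inches_per_count(170)    # the same counts stretched over the whole tower
print(f"9 ft span:   {narrow:.4f} in per count")
print(f"170 ft span: {full:.4f} in per count")
print(f"ratio: {full / narrow:.1f}x coarser")
```

The full-height range is roughly 19 times coarser, but both work out to a small fraction of an inch per count (about 0.003 in versus 0.06 in). In practice the instrument's own accuracy, typically quoted as a percentage of its span, is likely to matter more than the number of counts.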
At this point, the restart of this particular ISOM unit was being handled by the night shift lead operator, who was working from a secondary control room. At 5:00am, one hour before the end of the shift, the lead operator handed over the status of the restart to the operator on duty in the main control room, essentially over the radio. That main control room operator then took the time to write a note before leaving at 6am.
At 6:00am the day shift operator came into the main control room and there was a brief handover. He was working his 30th day in a row, 12 hours a day. That's not uncommon during maintenance and restarts, where there's more work to be done and people sometimes work extended shifts. These days most of the industry, and certainly the company I currently work for, limits you to a maximum of 21 days straight: you can't do more than three weeks without several days off, because long-term that becomes a fatigue issue. There are even stricter rules on the number of hours worked in a day.
The note in the logbook from the main control room operator, who, let's not forget, had only had the status handed over to him verbally an hour before he left, said exactly this for the ISOM unit: "...brought in some Raff to unit to pack Raff with." That's a bit vague. It didn't say how long, what to do with the Raff, whether the burners were on or off, whether it had been preheated, or at what level. Nothing at all. Just "brought in some Raff". Not particularly descriptive.
At 7:15am the day shift supervisor arrived, more than an hour late.
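A consecutive-days limit like the 21-day rule mentioned above is easy to check mechanically against a roster. A minimal sketch, assuming a roster is just a set of calendar dates worked; the function names and the 30-day example roster are hypothetical, and real fatigue rules vary by site and usually also cap daily hours:

```python
from datetime import date, timedelta

MAX_CONSECUTIVE_DAYS = 21  # limit described in the episode; other sites differ

def longest_run(days_worked: set[date]) -> int:
    """Length of the longest run of consecutive calendar days in the roster."""
    longest = 0
    for day in days_worked:
        if day - timedelta(days=1) in days_worked:
            continue  # not the first day of a run, so it was counted already
        length = 1
        while day + timedelta(days=length) in days_worked:
            length += 1
        longest = max(longest, length)
    return longest

def breaches_limit(days_worked: set[date], limit: int = MAX_CONSECUTIVE_DAYS) -> bool:
    """True when any run of consecutive days exceeds the limit."""
    return longest_run(days_worked) > limit

# Hypothetical roster: 30 straight days ending on 23 March 2005.
end = date(2005, 3, 23)
roster = {end - timedelta(days=i) for i in range(30)}
print(longest_run(roster), breaches_limit(roster))  # 30 True
```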
He was supposed to be there at six o'clock for the handover. Ordinarily, and certainly in my experience, a face-to-face verbal handover is a requirement: the day shift operator comes in and goes through the list of issues with the night shift operator before the night shift operator is allowed to leave. Sometimes when day shift operators are late, the night shift operator simply isn't allowed to leave until there's been a handover, which seems reasonable. The problem was that the day shift supervisor, who had the most experience, was over an hour late, so some of that information was lost, and all they had to work with was that one rather vague line in the logbook.
At 9:51am the operators resumed the startup sequence, starting to recirculate the Raffinate in the lower loop, and they added more liquid to the tower, thinking it still needed topping up. Ordinarily the level in the tower is controlled by an automatic level control valve on the discharge, but that valve had been set to manual in the SCADA system. Because the final destination for the circulated Raff was not made clear, and there were conflicting opinions as to where it should go, the operators left the valve shut for nearly two hours while they continued to put more Raff into the tower. Just prior to 10:00am, the furnace was turned on to start heating up.
Just before 11:00am, the shift supervisor was called away on an urgent personal matter, leaving just the one operator in control. That operator was nowhere near as experienced as the supervisor, and there was no one else available to replace him.
So this operator, on the 30th day straight of his swing, was in charge of three refinery units, one of which, the ISOM unit, was going through the startup sequence, which requires more attention than a unit that's already running.
Six years previously BP had acquired this site from Amoco, and as part of that merger the board decided on a 25%, one quarter, fixed cost reduction across all of its refineries, including headcount. There's a mentality in a merger between two corporate organizations that there will be role duplication, so what's the first thing you do? You cut back, because there must be a lot of duplication, I guess. The problem is that the people you let go aren't always the worst performers; the good people are usually the first to leave. Unfortunately, some of the people who were let go played key roles in the organization. The Baker Panel, which investigated this incident independently of the CSB, concluded that the restructuring following the merger resulted in a significant loss of people, expertise and experience. As part of that 25% reduction, they had cut back from two panel operators to one. A second panel operator, I think, would definitely have made a difference. Unfortunately there was just the one, and I think it's reasonable to assume he was fatigued.
So just before 11am there had been no regulation of the level in the tower at all. The indicator still showed an incorrect level, and they had no way of knowing how much Raffinate was actually in that tower. By lunchtime it had reached 98 feet. 98.
The particular kind of level transmitter used was now actually starting to read lower. Level transmitters that work on pressure can give anomalous readings, because the reading depends on the density of the liquid, and that density changes, for example as the liquid heats up. This transmitter had been calibrated, but against data mapped out in 1975, which I presume is when it was originally fitted, although the report doesn't make that clear. Worse, that data was for a completely different process with a different liquid, so the calibration was wrong anyway. The external sight glass, which is just what it sounds like, a piece of glass that lets you see the level in the tank, was so dirty it was completely unreadable. It hadn't been cleaned or maintained, or perhaps it became dirty during the maintenance work and was never cleaned before startup. And the level transmitter had never been tested at levels well above the nine foot mark, because it wasn't normal to fill the tower that high: that space was supposed to be left free for the vapor.
And now for the SCADA component. When you have a tower, a tank, or any kind of vessel that stores liquid, the flow in and the flow out are critical pieces of information. Put the two together with a level indication and you can very quickly see flow in minus flow out, and if one of those three doesn't agree with the others, you can tell. If you're putting in lots and lots of fluid, there's no fluid coming out, and the level isn't changing, you know something is wrong.
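Going back to the pressure-based level transmitter for a moment: a hydrostatic transmitter infers level from head pressure, h = ΔP / (ρg), using the density it was set up for. A minimal sketch, assuming a simple single-span transmitter and made-up densities, of how a density mismatch skews the reading. It deliberately ignores where the pressure taps sit and any temperature compensation, and the names and values are hypothetical:

```python
G = 9.81  # gravitational acceleration, m/s^2

def differential_pressure(level_m: float, density_kg_m3: float) -> float:
    """Hydrostatic head of a liquid column in pascals: dP = rho * g * h."""
    return density_kg_m3 * G * level_m

def indicated_level(dp_pa: float, calibration_density_kg_m3: float) -> float:
    """Level a pressure-based transmitter reports, using its calibration density."""
    return dp_pa / (calibration_density_kg_m3 * G)

TRUE_LEVEL_M = 2.0   # hypothetical actual liquid height above the lower tap
CAL_DENSITY = 800.0  # hypothetical density the transmitter was ranged for, kg/m^3

# Hypothetical actual densities: the calibration liquid, then lighter (e.g. hotter) ones.
for actual_density in (800.0, 720.0, 640.0):
    dp = differential_pressure(TRUE_LEVEL_M, actual_density)
    reading = indicated_level(dp, CAL_DENSITY)
    print(f"actual density {actual_density:.0f} kg/m^3 -> reads {reading:.2f} m")
```

In this simple model the reading scales with the ratio of actual to calibration density (2.00 m, 1.80 m, 1.60 m here), so a liquid lighter than the one the transmitter was set up for reads low, which is exactly the direction that hides an overfill.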
Unfortunately, the SCADA screens were designed so that the flow into and out of this tower were shown on completely different screens, and you couldn't have them up at the same time. That made it very difficult to tell that anything was wrong; had they been on the same screen, it would have been glaringly obvious. If I'd been designing this, it's normal practice to put the flow in and the flow out either horizontally opposed, one on the left and one on the right of the tank, or one above the other, so you can easily compare them visually and, with the level or volume indication, deduce if something's wrong. In this case they didn't have that. More advanced alarming and controls might even have included an automatic sanity calculation that tracks flow in and flow out against volume. I've done something like that in the past; control systems are there to help, and doing that calculation automatically makes it even easier for the operator. Again, it didn't exist in this case.
At around lunchtime, several of the contractors who weren't involved in recommissioning the ISOM unit, who were there for other projects, left the site for a team lunch celebrating one month LTI free. LTI is Lost Time Injury. How's that for irony?
At this point in our chain of events we're at 12:41pm. A high-pressure alarm went off at the top of the tower, because the liquid level had become so high that the gases at the top were being compressed. That high pressure should have been an indicator that something was really not right.
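The automatic sanity calculation described above can be sketched roughly like this: integrate flow in minus flow out over time and compare it with the volume change implied by the level reading. This is a minimal sketch only; the Sample fields, 15-minute scan interval, cross-sectional area and tolerance are all hypothetical values, not the Texas City tags or design data:

```python
from dataclasses import dataclass

@dataclass
class Sample:
    """One scan of hypothetical SCADA tags: flows in m^3/h, indicated level in m."""
    flow_in: float
    flow_out: float
    level: float

def balance_mismatch(samples: list[Sample], area_m2: float, dt_h: float) -> float:
    """Volume the flows say was added, minus the volume the level says was added (m^3).

    Each interval uses the flows recorded at the end of that interval (rectangle rule).
    """
    from_flows = sum((s.flow_in - s.flow_out) * dt_h for s in samples[1:])
    from_level = (samples[-1].level - samples[0].level) * area_m2
    return from_flows - from_level

def balance_alarm(samples: list[Sample], area_m2: float, dt_h: float,
                  tolerance_m3: float) -> bool:
    """True when flows and level disagree by more than the tolerance."""
    return abs(balance_mismatch(samples, area_m2, dt_h)) > tolerance_m3

# Hypothetical hour of filling: 20 m^3/h in, nothing out, level reading drifting down.
scans = [Sample(20.0, 0.0, lvl) for lvl in (2.60, 2.60, 2.55, 2.55, 2.50)]
mismatch = balance_mismatch(scans, area_m2=11.0, dt_h=0.25)
print(f"unaccounted volume: {mismatch:.1f} m^3")
print(balance_alarm(scans, area_m2=11.0, dt_h=0.25, tolerance_m3=2.0))
```

Here the flows say about 20 m^3 went in while the level says about 1 m^3 came out, so the check flags roughly 21 m^3 unaccounted for. A real implementation would also need to handle flow meter uncertainty and thermal expansion when choosing the tolerance.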
However, the level reading was still incorrect. It was showing about eight and a half feet and dropping, because the calibration was completely wrong, despite there being over a hundred feet of liquid in the tower. Because of the incorrect reading, the operator was confused: why would they be getting a high pressure alarm at the top of the tower? It made no sense. So they did the rather obvious thing and opened the manual valve designed to vent high pressure to the emergency relief system.
That emergency relief system was a little antiquated. It was what we refer to as a cold vent, in this case a blowdown drum. It wasn't flared. A flare is essentially a vent with a torch on the end: as we vent hydrocarbons, we put a match to them. That may sound a bit crazy, but it's oddly the safer thing to do, because if hydrocarbons escape cold into the atmosphere, the concentration is just right, and they meet an unintended ignition source, you get a fire, a fireball and an explosion, and people will probably get killed. The blowdown drum had been built in the 1950s, and there had been consideration of putting a flare on top of it, but one had not been fitted at this point, so it was still a cold vent.
In addition to opening the manual relief valve, which gave the high pressure gases a second path from the top of the tower to the blowdown drum, they also turned off two of the burners in the furnace. The idea was that reducing the furnace temperature would reduce the temperature of the Raffinate and, ultimately, the pressure at the top of the tower.
That was going to make little or no difference, because by that point the volume of hot Raffinate in the tower was huge. They knew something wasn't right. They couldn't check the level in the sight glass even if they'd wanted to, because it was too dirty to read, so they trusted their instrument. But then they started to check their flows, and noticed that the flow out of the tower wasn't right. So they opened a drain valve to send some of the Raffinate to the heavy Raffinate storage tanks. The problem was that this path wasn't designed for Raffinate at that sort of temperature. It ran through a heat exchanger, and because the liquid was coming more or less straight out of the furnace circuit, it ended up preheating the liquid coming into the tower by as much as 141°F, about 78°C. That had a cumulative effect, which we'll see in a minute.
The temperature of the liquid was now so high that it began to boil at the top of the tower, and the turbulence spilled liquid into the vapor line. Outside the tower there was a smaller vapor pipe, which was supposed to take the lighter hydrocarbon vapors off the top; that was the whole idea of the design. But because the liquid level was so high, it boiled over into that vapor line, down the outside of the tower, and toward the relief system. At 1:14pm the three pressure relief valves at the base of the tower opened, releasing the high temperature liquid and vapor into the blowdown drum.
The liquid started to fill the blowdown drum and very quickly overflowed through an overflow line into the process sewer, setting off more alarms in the control room. Another point of interest: the blowdown drum had yet another level switch, a high level alarm that ordinarily should never trip but is there as a last resort. It didn't activate. Just like the other high level switch, it was broken. The liquid now had nowhere to go but up and out of the vent stack.
It was now 1:20pm. Liquid was spraying out of the top of the blowdown stack, and several staff on the ground described it as a geyser of boiling gasoline and vapor. Essentially an entire gasoline tanker truck's worth came out of the top. It took about 90 seconds for the vapor cloud to find an ignition source, and by then it covered the entire ISOM unit, the demountable trailers nearby, and a parking area for vehicles adjacent to the unit. That was where it found its ignition source.
Two workers were parked just 25 feet, about 8 meters, from the base of the blowdown drum in a diesel-powered pickup truck, sitting there idling. They were waiting for reasons unexplained, but that's okay; they had no reason to suspect anything was wrong. Then the engine began to race, because it was now sucking gasoline vapor in through the air intake, in addition to the diesel being injected through the fuel injectors as part of the normal combustion process. They tried to turn the vehicle off but they couldn't, so they got out and ran, which is exactly what I'd do.
The reason the engine wouldn't shut off is that diesels use a high compression ratio in the combustion chamber to ignite the fuel; there are no spark plugs. The air intake was now bringing in gasoline vapor along with the air, and that was mixing with the diesel from the injectors. Turning the ignition off cuts the fuel pump and the injectors, but since fuel was now also coming in through the air intake, which has no cut-off, the engine could theoretically keep running indefinitely, and that's exactly what happened. They couldn't turn off the engine, so they ran. With that much fuel coming in, the engine raced beyond its maximum rev limit, the combustion sequencing stopped working properly, and some uncombusted fuel escaped down the exhaust. A backfire is when that fuel combusts outside the combustion chamber, and it was a backfire from that engine that ultimately ignited the gasoline vapor cloud. The force of the explosion killed 12 people in the nearest trailer, and another three people were killed in a nearby trailer. The damage took two years to rebuild, not to mention the lives that were lost.
So, the investigation. There were internal BP reports in the years leading up to the incident, and one of them was tabled to executives in London stating serious concerns about the potential for a major site incident. There were 80, that's eight-zero, hydrocarbon releases in the year prior alone. 80. That's a lot. In other words, these were releases of hydrocarbons in an unplanned fashion, for unplanned reasons.
During 2004 there were three major accidents at BP Texas City. One, on March 30th, caused 30 million dollars worth of damage but no fatalities. The most disturbing part is that the other two incidents in 2004 each had one fatality. When I read that I couldn't believe it, but what's worse, beyond the fatalities themselves, is that the lost time injury statistics specifically excluded fatalities. I have no idea how that makes any sense. If someone's dead, they're not showing up to work, so surely that's lost time. I suppose the lost time would go on indefinitely, so maybe they decided to have a separate measure for fatalities at work, or just didn't include them in the statistics. Either way, that's mind-blowingly terrible.
They also dug into the maintenance budgets. We mentioned the 25% cuts earlier. Funding for maintenance did increase in the 2003-2004 year, but that money was primarily directed at environmental compliance retrofits and accident response, because they kept having accidents, not at preventative maintenance, which of course would have helped. One of their own internal safety surveys just prior to the 2005 disaster noted that production and budget compliance got recognised and rewarded above anything else, which is a little disturbing. The only safety metric used to calculate executive bonuses was the personal injury rate, the lost time injury rate. So there was no recognition for preventative maintenance plans or for strategies to reduce unplanned venting, none of that, and certainly nothing for fatalities either, which is insane. They also noted the high executive turnover in the decade leading up to the incident: on average an executive stayed at that facility for only 18 months. Just a year and a half.
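To see how much a definition choice can move a headline number, here's a minimal sketch of an injury frequency rate, cases per a fixed number of hours worked. The base is a convention: 1,000,000 hours is common for LTIFR, while OSHA incidence rates use 200,000. The two fatalities echo the two 2004 deaths mentioned above; the hours worked and injury count are hypothetical:

```python
def incident_rate(cases: int, hours_worked: float, base_hours: float = 1_000_000) -> float:
    """Cases per base_hours worked.

    1,000,000 hours is a common base for LTIFR; OSHA incidence rates use 200,000.
    """
    if hours_worked <= 0:
        raise ValueError("hours_worked must be positive")
    return cases * base_hours / hours_worked

# Hypothetical year: 3.6 million hours worked, 2 lost time injuries, 2 fatalities.
hours = 3_600_000
lost_time_injuries = 2
fatalities = 2

excluding = incident_rate(lost_time_injuries, hours)
including = incident_rate(lost_time_injuries + fatalities, hours)
print(f"excluding fatalities: {excluding:.2f} per million hours")
print(f"including fatalities: {including:.2f} per million hours")
```

With these made-up numbers, leaving the deaths out halves the rate (about 0.56 versus 1.11 per million hours). And whichever way it's counted, a personal injury rate says nothing about the 80 hydrocarbon releases.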
And since executives were rewarded based on profit, which is driven by production and reduced spending, there were no major preventative maintenance initiatives implemented during that decade. BP had essentially developed a culture, as many industrial sites have these days, of focusing on personal safety: slips, trips, falls and, of all things, paper cuts. They focused on that rather than process safety, which is to say the process safety automation systems, and risk and hazard analysis from the point of view of the plant process. What are we processing? Well, in this case, oil: hydrocarbons. Are they explosive? Yes, and that's the problem from that point of view. Over time, that culture leads to erosion. You erode away the employees who are focused on process safety, and they get replaced by people who are far more focused on personal safety, like slips, trips and falls. In many respects, that's easier for a lot of people to understand than process safety, which can be quite complicated. If you're not a chemical, process, mechanical or electrical engineer, process safety can be very hard to get your head around, because it's more complex. Anyway, over the years those people were eroded out of the organization, leaving most of the senior executives blind to the process safety risks present at that facility. Beyond that, BP had an internal culture that didn't reward or acknowledge the reporting of potential safety risks, which we sometimes refer to as near misses. The Texas City maintenance manager noted in an email to executives prior to the incident that BP had a ways to go to becoming a learning culture and away from a punitive culture.
I've worked in a few different places where, if you raise an issue, whether it's a defect, something wrong or a potential safety issue, in extreme cases you get laughed out of the room. In other cases, you get told you're just being a nitpicky so-and-so: we have other things to worry about, we have real problems to worry about. That sort of punitive culture discourages people from flagging issues, process safety issues in particular, and that's poisonous. I'm happy to say that my current employer is not like that. My current employer is actually very good at taking that seriously, which is great. In the 19 previous startups of ISOM units at that facility, in the vast majority of instances the operators ran the ISOM units beyond the range of the level transmitter, that is, above the nine foot mark. But it was never investigated, because these weren't considered near misses, and it became normal operating procedure. Like I said, monkeys and the banana and the fire hose. These days we investigate near misses as thoroughly as though they were an actual serious incident where someone was injured, because near misses are an indicator of a future problem, one where it will no longer be a near miss. So finally, how could we have prevented this accident? Or rather, this disaster? First of all, in safety systems we do a safety study, designed to look at all the risk factors and the consequences if things go wrong. Safety studies incorporate reaction times, damage to the environment, personal injuries, process damage and so on. All of these factors and several more are rolled up into what we call safety integrity levels, or SIL ratings, and the SIL rating drives the level of protection that's required. In particular, this sort of application would require redundant level transmitters.
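Here's a minimal sketch of the kind of check that would have turned those over-range startups into investigated near misses. It assumes a hypothetical transmitter whose upper range value is the nine foot mark, and a hypothetical log of startup readings; the `Reading` type and function names are made up for illustration. The key idea is that a reading pinned at the top of the transmitter's range doesn't tell you the real level, so it gets flagged rather than accepted as normal.

```python
from dataclasses import dataclass

# Hypothetical transmitter upper range value: the "nine foot mark".
URV_FT = 9.0


@dataclass
class Reading:
    startup_id: int   # which startup the reading came from
    level_ft: float   # indicated level in feet


def pinned_at_top(reading: Reading, urv_ft: float = URV_FT) -> bool:
    """True if the indication is at or beyond the upper range: the real level is unknown."""
    return reading.level_ft >= urv_ft


def startups_to_investigate(readings: list[Reading]) -> list[int]:
    """Return the startup IDs with at least one over-range reading, treated as near misses."""
    return sorted({r.startup_id for r in readings if pinned_at_top(r)})


# Hypothetical startup log.
log = [Reading(1, 7.5), Reading(2, 9.0), Reading(3, 8.2), Reading(4, 9.0), Reading(4, 9.0)]
print(startups_to_investigate(log))  # [2, 4]
```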
A single level transmitter with a level switch would not be sufficient. We would have two or possibly three identical level transmitters, mounted near each other and independently reporting their readings. They would require calibration every 6 to 12 months against an independently verified calibration source. But that was not what was installed. Safety interlocking to prevent overfilling would also be common. The pumps that circulated the raffinate could have been cut off by the high level indicators with one-out-of-two (1oo2) or two-out-of-three (2oo3) voting, built into a safety interlocking system. That's what we would have installed had it been designed and built today, or even in the last 10 years. Flaring seems environmentally questionable, but in terms of safety, because it consumes the hydrocarbons as they exit to atmosphere, it prevents the build-up that could lead to an explosion. Whether flaring or cold venting is environmentally better is a separate discussion, not for this show perhaps, but from a safety point of view there's no question that flaring, done safely and in a controlled fashion, is by far the better option. Now, flaring was actually proposed on five separate occasions in the two years leading up to the accident, but due to production pressures and cost, flares were never fitted. The unit would have had to be offline to fit them, plus they would cost money to fit. Production was king, so they didn't. Prior to startup, all of the alarms and indications were supposed to be checked. That was part of an internal BP procedure that existed at the time, but the checks were not performed for most of those 19 prior startups that the Chemical Safety Board investigated. They just skipped them. Then again, I'm not sure how you would validate the level without a functioning sight glass, an independently calibrated level transmitter or a test liquid. Alright, beyond that, handover. Another big problem.
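As a rough illustration of what 1oo2 and 2oo3 voting means, here's a minimal sketch of generic "M out of N" voting: each independent level transmitter votes "high" if its reading is at or above a trip setpoint, and the interlock trips the feed pumps when at least M of the N transmitters agree. The 8.5 foot setpoint and the readings are hypothetical. A real safety instrumented system runs this logic in a certified safety controller, not in Python, and also has to deal with faulted or bad-quality transmitter signals, which this sketch ignores.

```python
# Minimal sketch of MooN voting for a high-level trip.
# Assumptions: hypothetical setpoint and readings; no handling of faulted signals.


def high_votes(readings_ft: list[float], setpoint_ft: float) -> int:
    """Count transmitters whose reading is at or above the trip setpoint."""
    return sum(1 for level in readings_ft if level >= setpoint_ft)


def moon_trip(readings_ft: list[float], m: int, setpoint_ft: float) -> bool:
    """Return True if at least m of the len(readings_ft) transmitters vote high."""
    n = len(readings_ft)
    if not 1 <= m <= n:
        raise ValueError(f"invalid voting {m}oo{n}")
    return high_votes(readings_ft, setpoint_ft) >= m


SETPOINT_FT = 8.5  # hypothetical high-level trip setpoint

# 1oo2: either of two transmitters can trip the pumps.
print(moon_trip([8.7, 6.1], m=1, setpoint_ft=SETPOINT_FT))       # True
# 2oo3: two of three must agree before the pumps are tripped.
print(moon_trip([8.7, 6.1, 6.3], m=2, setpoint_ft=SETPOINT_FT))  # False
print(moon_trip([8.7, 8.9, 6.3], m=2, setpoint_ft=SETPOINT_FT))  # True
```

The design trade-off: 1oo2 trips on any single high reading, so it rarely misses a genuine high level but will trip spuriously if one transmitter fails high. 2oo3 tolerates one failed transmitter in either direction, at the cost of needing a third instrument.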
In the industry, a lot of companies have a policy that there must be a face-to-face handover, certainly we do, with detailed notes when changing shifts or swings. That changeover period is crucial. Training budgets had been cut to the point where training was delivered via a standard computerized system rather than face-to-face. Computerized systems have their place for consistency and simplicity in many cases, and for budgetary reasons as well of course, but face-to-face training allows questions, answers and probing. Computerized training is essentially a poor replacement for it. Simulation. Process simulation. Five years before this incident, simulators were recommended for operator training. Unfortunately, due to cost, they were never implemented. They could have had a simulation system, much like my current employer does, where you can train operators to safely start up and shut down a plant. You can inject errors and faults into the system during startup and shutdown, test how the operator responds by following the appropriate procedures, and prove that they are paying attention during their training. Now, as you can see from this incident, the impacts of mergers and cost reductions aren't felt immediately. These things have a habit of taking time before they're felt. The same goes for preventative maintenance. The reason we do preventative maintenance is so that on that one time in 1,000 when all of the other elements line up, the safety systems designed to protect us will function correctly. We have a thing called CFTs, short for critical function testing. CFTs are run on a 6 to 12 month basis at most mining, oil and gas plants. Why? Because you need to know that your safety system is going to operate when it should and how it should, and the tests have to be done regularly. Production doesn't matter: you have to make sure your CFTs are done. Fatigue prevention is another problem.
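Keeping on top of CFTs is largely a scheduling discipline. Here's a minimal sketch of an overdue-test check, assuming a hypothetical register of safety functions, each with the date of its last CFT and a test interval taken from the 6 to 12 month range mentioned above (approximated as 182 or 365 days). The tag names are made up for illustration.

```python
from datetime import date, timedelta

# Hypothetical CFT register: safety function -> (date of last CFT, interval in days).
# Intervals assume the 6 to 12 month range (~182 or 365 days).
CFT_REGISTER = {
    "LT-101 high level trip": (date(2024, 1, 15), 182),
    "LT-102 high level trip": (date(2023, 6, 1), 365),
    "Feed pump interlock": (date(2023, 3, 10), 365),
}


def overdue(register: dict[str, tuple[date, int]], today: date) -> list[str]:
    """Return safety functions whose next CFT due date falls before `today`."""
    return sorted(
        name
        for name, (last_test, interval_days) in register.items()
        if last_test + timedelta(days=interval_days) < today
    )


print(overdue(CFT_REGISTER, today=date(2024, 5, 1)))  # ['Feed pump interlock']
```

In a real plant, an overdue CFT would be escalated regardless of production pressure, which is the point being made above.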
There was no fatigue prevention policy at BP at the time, or in the industry as a whole, but now there definitely is. So in closing, it may or may not be of interest, but on the 1st of February 2013, BP sold the Texas City refinery to Marathon Petroleum Corporation. That ended BP's 15 years operating the facility. It is now called the Marathon Galveston Bay Refinery and is still in operation today. I think it's important to reflect on one last comment about this disaster, about organisational learning, the value of experience and the cost of cutbacks. It's a statement made by Trevor Kletz in his 1993 book "Lessons from Disaster": "Organizations have no memory. Only people have memory." We write reports. We do investigations and talk about everything that went wrong, and about how these essentially innocent people were killed because of an accident. I hate that word "accident", because it suggests that it was accidental, but it wasn't: it could have been prevented. It's like traffic accidents. I don't call them accidents, I call them traffic collisions, because that's what they are: collisions. Was this an accident? No, it was a disaster. And the disaster could have been prevented. If you're enjoying Causality and want to support the show, you can, like one of our backers, Chris Stone. He and many others are patrons of the show via Patreon, and you can find it at https://patreon.com/johnchidgey, all one word. If you'd like to contribute something, anything at all, it's very much appreciated. This was Causality. I'm John Chidgey. Thanks for listening. (gentle music)

