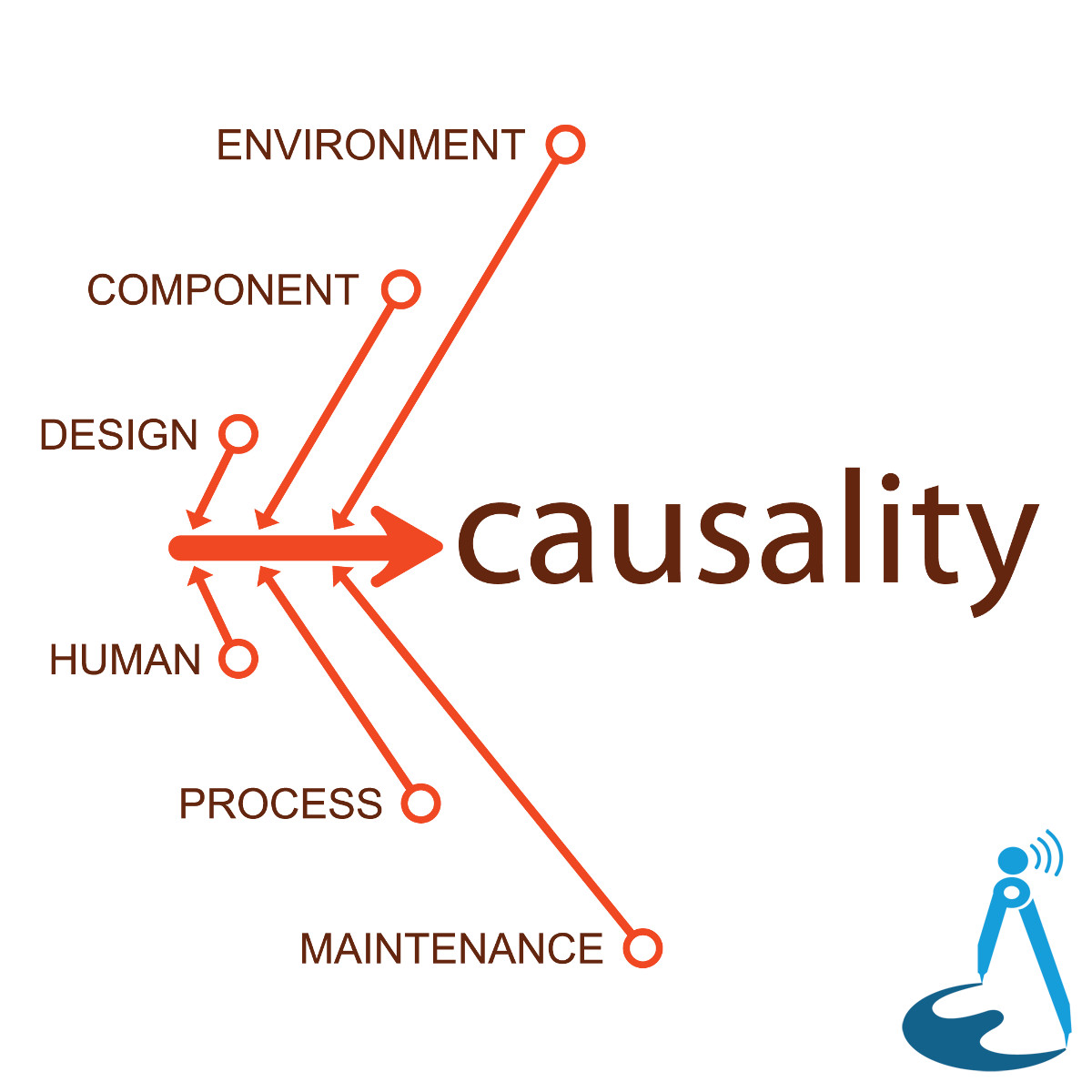

Causality 52: Colonial Pipeline

10 December, 2023

Show Notes

Hearing:

- Hearing Before the Committee on Homeland Security House of Representatives Transcript

- Threats to Critical Infrastructure: Examining the Colonial Pipeline Cyber-Attack (PDF)

- Charles Carmakal Statement from Hearing (PDF)

General Information:

- Colonial Pipeline

- Colonial Pipeline Ransomware Attack

- How Three Major Cyber Attacks Could Have Been Prevented

- What IT security teams can learn from the Colonial Pipeline

- Evolution of the Chief Information Security Officer

- US Fuel Pipeline Hackers Statement

- DarkSide

- DarkSide Leaks Press Center (X)

- DarkSide Ransomware Gang Behind Pipeline Hack Quits

- TSA Renews Cyber-Security Guidelines for Pipelines

- DHS Announces New Cybersecurity Requirements for Critical Pipeline Owners and Operators

- Federal Motor Carrier Safety Administration

- Emergency Declaration for 17 States

Episode Gold Producers: 'r' and Steven Bridle.

Episode Silver Producers: Mitch Biegler, Shane O'Neill, Lesley, Jared Roman, Joel Maher, Katharina Will, Chad Juehring, Dave Jones, Kellen Frodelius-Fujimoto and Ian Gallagher.

Premium supporters have access to high-quality, early-released episodes and a full back-catalogue of previous episodes.

SUPPORT CAUSALITY PATREON APPLE PODCASTS SPOTIFY PAYPAL ME

STREAMING VALUE SUPPORT FOUNTAIN PODVERSE BREEZ PODFRIEND

CONTACT FEEDBACK REDDIT FEDIVERSE TWITTER FACEBOOK

LISTEN RSS PODFRIEND APPLE PODCASTS SPOTIFY

PANDORA

GOOGLE PODCASTS

INSTAGRAM

STITCHER

IHEART RADIO

TUNEIN RADIO

CASTBOX FM

OVERCAST

POCKETCASTS

PODCAST ADDICT

CASTRO

GAANA

JIOSAAVN

AMAZON

YOUTUBE

Comments

Causality 52: Colonial Pipeline https://engineered.network/causality/episode-52-colonial-pipeline with @chidgey #EngNetPeople

John Chidgey

John is an Electrical, Instrumentation and Control Systems Engineer, software developer, podcaster and vocal actor, and runs TechDistortion and the Engineered Network. John is a Chartered Professional Engineer in both Electrical Engineering and Information, Telecommunications and Electronics Engineering (ITEE) and a semi-regular conference speaker.

John has produced and appeared on many podcasts including Pragmatic and Causality and is available for hire for Vocal Acting or advertising. He has experience and interest in HMI Design, Alarm Management, Cyber-security and Root Cause Analysis.

He has been described as the David Attenborough of disasters, and as a Dreamy Narrator with Great Pipes by the Podfather Adam Curry.

You can find him on the Fediverse and on Twitter.